You really should use dev containers

Intro

I rarely code, but when I do, it's usually in Python. A few weeks ago I had to write a script to migrate all of a company's CV data to another system by means of a database dump and hammering this data down an API. I was excited to get coding again, and, as usually happens with excitement, it was crushed by reality within a mere 15 minutes when my Python development environment came to a halt.

I started by testing the API, which required a package called requests. Instead of pip doing its thing and installing the package, it threw up a screenful of error messages. After two hours of troubleshooting, the issue turned out to be somehow related to Xcode on macOS Big Sur, possibly because multiple Python environments installed with brew overlapped with pyenv, and also quite possibly: aliens. I eventually resorted to uninstalling every Python version apart from the macOS native one (Python 2), installing a new Xcode, and casting some dark magic spells on my .zshrc (to allow building Python versions, which had also broken because of Xcode). After the purge, I installed only Python 3.8.5 with pyenv, which finally let me run pip install -r requirements.txt successfully.

As I didn't really learn anything new from the troubleshooting process, history was bound to repeat itself unless something changed.

Enter: dev containers


Dev Containers

Dev containers is short for development containers: running and compiling your code inside a container instead of directly on the host machine's OS.

A few benefits

- Separate OS and dev environment
  - The coding environment is tailored to your code: multiple versions of compilers, interpreters, or package managers (as in the case of Python) can't conflict with each other
  - The environment is (mostly) free of your OS's problems (such as the Xcode problems)
  - Your OS stays clean of the things you have to install to get your project running
- The environment is portable and consistent
  - Teams can develop in the exact same environment
  - The same container can be used in the CI/CD pipeline to build your code
  - Works on your machine -> should now also work in dev/prod
- Even the local environment can be codified!
  - ...and analyzed
  - ...and versioned

Dev Containers in VSCode

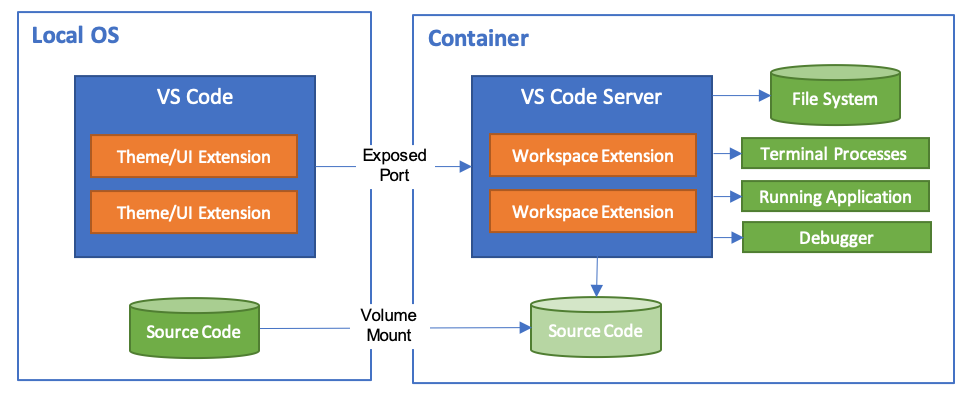

The functionality of developing inside a container in VSCode is provided by a plugin called Visual Studio Code Remote - Containers.

This plugin runs the development container from VS Code with your code mounted inside it, so that the VS Code server and extensions also run within the container. Everything except the UI is neatly separated from the OS.

This is splendid, but the real magic lies in how easy the containerized development environment is to use: it doesn't differ at all from normal usage of VSCode running directly on your OS!

Defining the environment

The environment is defined by two files:

- Dockerfile, defining the actual container where your code is run
- devcontainer.json, telling VSCode how to run your container

You can find ready-made Dockerfiles and devcontainer.json files in Microsoft's vscode-dev-containers repo, which lets you skip the (rather tedious) process of writing these files from scratch.

Requirements

To run your dev environment within a container inside Visual Studio Code you need:

- The Remote - Containers plugin installed
- The following file structure in your project:

```
├── .devcontainer
│   ├── Dockerfile
│   └── devcontainer.json
```
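Before opening a project in a container, it can help to confirm that this layout is actually in place. A minimal sketch; the helper name missing_devcontainer_files is hypothetical, and it only checks that the two files exist, not that their contents are valid.

```python
from pathlib import Path

# The two files this setup expects inside the .devcontainer folder
REQUIRED_FILES = ("Dockerfile", "devcontainer.json")


def missing_devcontainer_files(project_root: str) -> list:
    """Return the names of required .devcontainer files missing under project_root."""
    devcontainer_dir = Path(project_root) / ".devcontainer"
    return [name for name in REQUIRED_FILES if not (devcontainer_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_devcontainer_files(".")
    if missing:
        print("Missing in .devcontainer/:", ", ".join(missing))
    else:
        print(".devcontainer/ looks complete")
```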

Even though it's fast to get a dev environment running this way, I strongly suggest reserving a few hours to familiarize yourself with the documentation and the configuration options.

The Python Case, simplified

I grabbed the Python 3 dev container definition from Microsoft's repo and modified the devcontainer.json only slightly:

- Dockerfile as the dockerfile instead of base.Dockerfile (for even more simplicity)
- INSTALL_NODE as false (no need for Node in my case)
- VARIANT as 3.8

This resulted in my devcontainer.json looking like this:

```json
{
    "name": "Python 3",
    "build": {
        "dockerfile": "Dockerfile",
        "context": "..",
        "args": {
            // Update 'VARIANT' to pick a Python version: 3, 3.6, 3.7, 3.8, 3.9
            "VARIANT": "3.8",
            // Options
            "INSTALL_NODE": "false",
            "NODE_VERSION": "lts/*"
        }
    },
    ...
```
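Note that devcontainer.json allows // comments, so Python's json module rejects the file as-is. If you want to read the build args from a script (say, to check which VARIANT a repo pins), one simple approach is to drop comment-only lines before parsing. A minimal sketch under that assumption; the load_devcontainer helper is hypothetical, and comments after a value, /* */ blocks, and trailing commas are not handled. SAMPLE is the build section shown above, closed off so it is complete.

```python
import json

# The build section from above, closed with braces so it is a complete document
SAMPLE = """
{
    "name": "Python 3",
    "build": {
        "dockerfile": "Dockerfile",
        "context": "..",
        "args": {
            // Update 'VARIANT' to pick a Python version: 3, 3.6, 3.7, 3.8, 3.9
            "VARIANT": "3.8",
            // Options
            "INSTALL_NODE": "false",
            "NODE_VERSION": "lts/*"
        }
    }
}
"""


def load_devcontainer(text: str) -> dict:
    """Parse devcontainer.json text after dropping lines that are only // comments."""
    kept = [line for line in text.splitlines() if not line.lstrip().startswith("//")]
    return json.loads("\n".join(kept))


if __name__ == "__main__":
    build_args = load_devcontainer(SAMPLE)["build"]["args"]
    print(build_args["VARIANT"], build_args["INSTALL_NODE"])  # prints: 3.8 false
```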

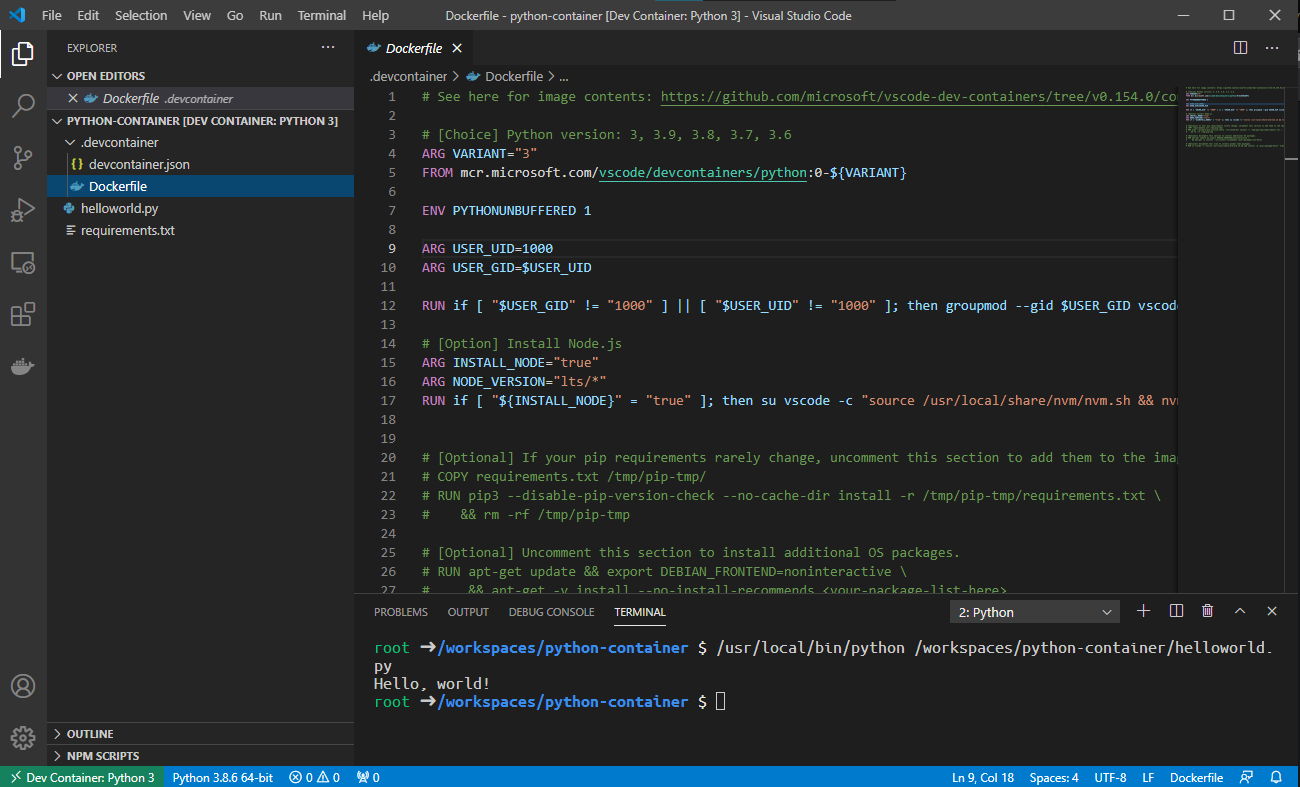

I also uncommented a section of the Dockerfile that installs the pip requirements into the image automatically during the build:

```dockerfile
# [Choice] Python version: 3, 3.9, 3.8, 3.7, 3.6
ARG VARIANT=3
FROM mcr.microsoft.com/vscode/devcontainers/python:${VARIANT}

# [Option] Install Node.js
ARG INSTALL_NODE="true"
ARG NODE_VERSION="lts/*"
RUN if [ "${INSTALL_NODE}" = "true" ]; then su vscode -c "umask 0002 && . /usr/local/share/nvm/nvm.sh && nvm install ${NODE_VERSION} 2>&1"; fi

# [Optional] If your pip requirements rarely change, uncomment this section to add them to the image.
COPY requirements.txt /tmp/pip-tmp/
RUN pip3 --disable-pip-version-check --no-cache-dir install -r /tmp/pip-tmp/requirements.txt \
    && rm -rf /tmp/pip-tmp
```
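Once the image is built, a quick way to confirm that the pip step actually baked your requirements in is to query the installed package metadata from inside the container. A minimal sketch using importlib.metadata (in the standard library since Python 3.8, matching the VARIANT above); the installed_versions helper is hypothetical, and it takes package names directly rather than parsing requirements.txt.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # "requests" is the package this project needed; list your own requirements here
    for name, version in installed_versions(["requests"]).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```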

After that, I ran Remote-Containers: Open Folder in Container from the command palette. This built the container image and reopened the VSCode session inside the container. In the bottom-left corner of the VSCode window, a green area with the text "Dev Container: Python 3" now reminded me that I was working inside a container.
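The green indicator is the UI's way of telling you where you are. From the code's side, a rough check is possible too. The sketch below is a heuristic only, not an API of the extension: Docker typically creates /.dockerenv inside its containers, and on cgroup v1 hosts /proc/1/cgroup usually mentions docker or containerd. Other runtimes or cgroup v2 setups may leave neither trace, so a False result is not conclusive. The function name probably_in_container is hypothetical.

```python
import os
from pathlib import Path


def probably_in_container() -> bool:
    """Best-effort guess (heuristic, not authoritative) whether we run in a container."""
    # Docker usually creates this marker file at the container's filesystem root
    if Path("/.dockerenv").exists():
        return True
    # On cgroup v1, PID 1's cgroup paths often name the container runtime
    cgroup = Path("/proc/1/cgroup")
    if cgroup.is_file():
        try:
            text = cgroup.read_text()
        except OSError:
            return False
        return any(marker in text for marker in ("docker", "containerd", "kubepods"))
    return False


if __name__ == "__main__":
    where = "inside a container" if probably_in_container() else "on the host (probably)"
    print(f"Running {where}; working directory: {os.getcwd()}")
```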

Getting my Python project running inside a dev container took about as long as debugging the weird pip problem I ranted about in the intro. The difference is that now anyone in my company can simply git clone the repo and get it up and running. This was also a learning experience: the next time I code in Python (or any other language), I have an easy way to isolate the dev environment from the rest of my setup.